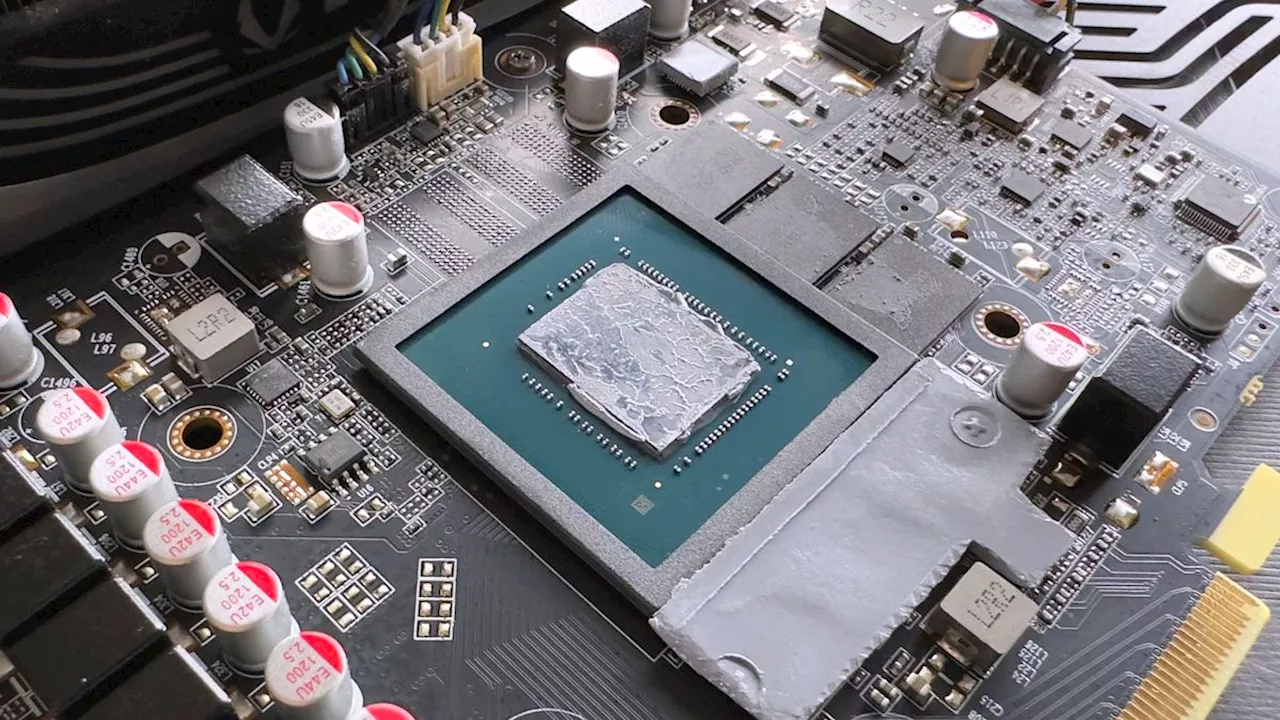

For 100 concurrent users, the card delivered 12.88 tokens per second, just slightly faster than average human reading speed.

If you want to scale a large language model to a few thousand users, you might think a beefy enterprise GPU is a hard requirement. However, at least according to Backprop, all you actually need is a four-year-old graphics card.

So, it's not surprising that Backprop opted for a smaller model like Llama 3.1 8B, since it fits comfortably within the card's memory and leaves plenty of room for key-value (KV) caching. […], which is widely used to serve LLMs across multiple GPUs or nodes at scale. But before you get too excited, these results come with a few caveats.
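To put rough numbers on why an 8B model leaves room for KV caching, the sketch below budgets the memory of a 24 GB RTX 3090. It assumes FP16 weights and an FP16 KV cache, uses Llama 3.1 8B's published shape (about 8.03 billion parameters, 32 layers, and 8 key-value heads of dimension 128 under grouped-query attention), and sets aside a hypothetical 10% of VRAM for activations and runtime overhead. None of these settings come from Backprop's write-up, and the 300-token prompt in the example workload is likewise an assumption, so treat the result as an order-of-magnitude estimate.

```python
# Back-of-the-envelope VRAM budget for Llama 3.1 8B on one RTX 3090.
# Assumptions (not from Backprop): FP16 weights and KV cache, plus a
# hypothetical 10% VRAM reserve for activations, CUDA context, etc.

GIB = 1024 ** 3


def weight_bytes(n_params: float, bytes_per_param: int = 2) -> float:
    """Memory taken by the model weights (2 bytes per parameter in FP16)."""
    return n_params * bytes_per_param


def kv_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                       bytes_per_elem: int = 2) -> int:
    """KV cache cost of one token: one key and one value vector per layer per KV head."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem


def kv_token_capacity(vram_bytes: float, n_params: float, n_layers: int,
                      n_kv_heads: int, head_dim: int,
                      reserve_fraction: float = 0.10) -> int:
    """Number of tokens whose KV cache fits once the weights are loaded."""
    usable = vram_bytes * (1 - reserve_fraction)
    free_for_kv = usable - weight_bytes(n_params)
    per_token = kv_bytes_per_token(n_layers, n_kv_heads, head_dim)
    # Clamp at zero: if the weights alone don't fit, there is no room for cache.
    return max(0, int(free_for_kv // per_token))


if __name__ == "__main__":
    vram = 24 * GIB       # RTX 3090: 24 GB of GDDR6X
    params = 8.03e9       # Llama 3.1 8B, approximate parameter count
    per_token = kv_bytes_per_token(32, 8, 128)
    capacity = kv_token_capacity(vram, params, 32, 8, 128)

    print(f"weights:  {weight_bytes(params) / GIB:.1f} GiB")
    print(f"KV cache: {per_token // 1024} KiB per token")
    print(f"capacity: ~{capacity:,} cached tokens")

    # Hypothetical workload: 100 concurrent requests, each holding a
    # 300-token prompt plus up to 100 generated tokens in the cache.
    needed = 100 * (300 + 100)
    print(f"needed for 100 requests: {needed:,} tokens, fits: {needed <= capacity}")
```

Under these assumptions the weights take roughly 15 GiB, each cached token costs 128 KiB, and about 54,000 tokens of cache remain, comfortably more than the 40,000 tokens the hypothetical 100-request workload would need. Quantized weights or a smaller reserve would stretch that headroom further.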

It's also worth noting that Backprop's testing used relatively short prompts and a maximum output of just 100 tokens, so these results are more indicative of the performance you might expect from a customer-service chatbot than from a summarization app. […], and prompts ranging from 200 to 300 tokens in length, Ojasaar found that the 3090 could still achieve acceptable generation rates of about 11 tokens per second while serving 50 concurrent requests.
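Reading the quoted rates as per-request figures (the comparison with human reading speed implies as much), a little arithmetic turns them into aggregate throughput and into how long a full 100-token reply takes. This sketch assumes a steady generation rate and ignores time-to-first-token and prompt processing, so real completion times would be somewhat longer. The `ServingRun` class is purely illustrative and not part of any benchmark tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServingRun:
    """Hypothetical summary of one benchmark configuration."""
    concurrent_requests: int
    tokens_per_second_per_request: float

    @property
    def aggregate_tokens_per_second(self) -> float:
        # Total tokens the card emits per second across all requests.
        return self.concurrent_requests * self.tokens_per_second_per_request

    def seconds_for_reply(self, output_tokens: int) -> float:
        # Decode time only; assumes a constant per-request generation rate.
        return output_tokens / self.tokens_per_second_per_request


runs = {
    "short prompts, 100 users": ServingRun(100, 12.88),
    "200-300 token prompts, 50 requests": ServingRun(50, 11.0),
}

for name, run in runs.items():
    print(f"{name}: ~{run.aggregate_tokens_per_second:,.0f} tok/s total, "
          f"~{run.seconds_for_reply(100):.1f} s per 100-token reply")
```

That works out to roughly 1,288 tokens per second in aggregate for the 100-user run and about 550 for the 50-request run, with a 100-token reply taking around 8 to 9 seconds in either case.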

United Kingdom Latest News, United Kingdom Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

Rumoured Nvidia RTX 4070 GPU with cheapo GDDR6 memory is now officialJeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.

Rumoured Nvidia RTX 4070 GPU with cheapo GDDR6 memory is now officialJeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.

Read more »

NVIDIA's reportedly scaling back RTX 40 series production by as much as 50% in preparation for the Blackwell RTX 50 launchNick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long defunct UK tech site.

NVIDIA's reportedly scaling back RTX 40 series production by as much as 50% in preparation for the Blackwell RTX 50 launchNick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long defunct UK tech site.

Read more »

Steam's favourite GPU, the RTX 3060, is nearing its end as Nvidia gets ready to issue the last batch of chipsNick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long defunct UK tech site.

Steam's favourite GPU, the RTX 3060, is nearing its end as Nvidia gets ready to issue the last batch of chipsNick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long defunct UK tech site.

Read more »

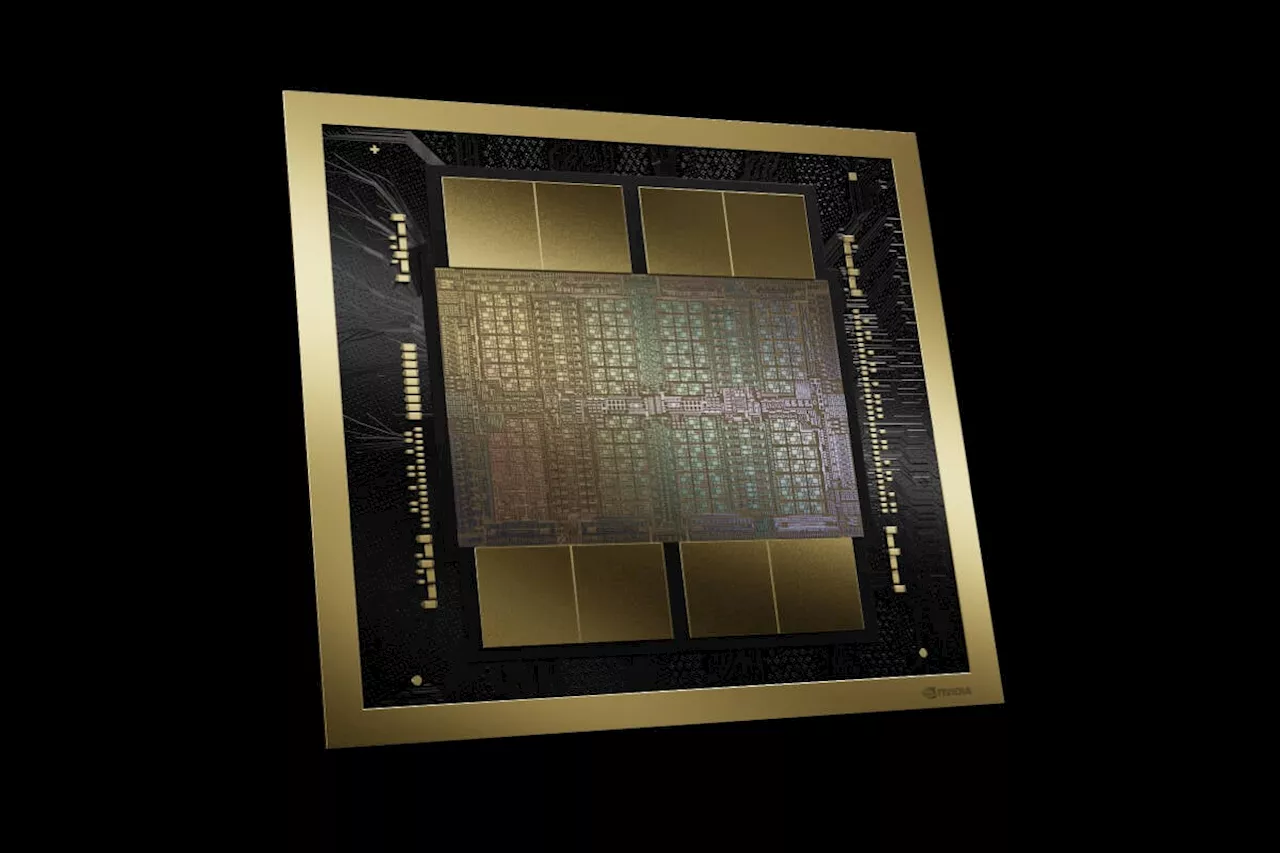

Nvidia reportedly delays Blackwell GPUs until 2025 over packaging issuesBackdrop of multi-billion dollar orders to support AI services, but unlikely to hurt NVDA long term

Nvidia reportedly delays Blackwell GPUs until 2025 over packaging issuesBackdrop of multi-billion dollar orders to support AI services, but unlikely to hurt NVDA long term

Read more »

DoJ launches probes as AI antitrust storm clouds gather round NvidiaUS regulator reportedly not happy about Run:ai buy... nor industry dominance

DoJ launches probes as AI antitrust storm clouds gather round NvidiaUS regulator reportedly not happy about Run:ai buy... nor industry dominance

Read more »

US probes Nvidia’s acquisition of Israeli AI start-upJustice department has increased scrutiny of the chipmaker’s power in the emerging sector

US probes Nvidia’s acquisition of Israeli AI start-upJustice department has increased scrutiny of the chipmaker’s power in the emerging sector

Read more »