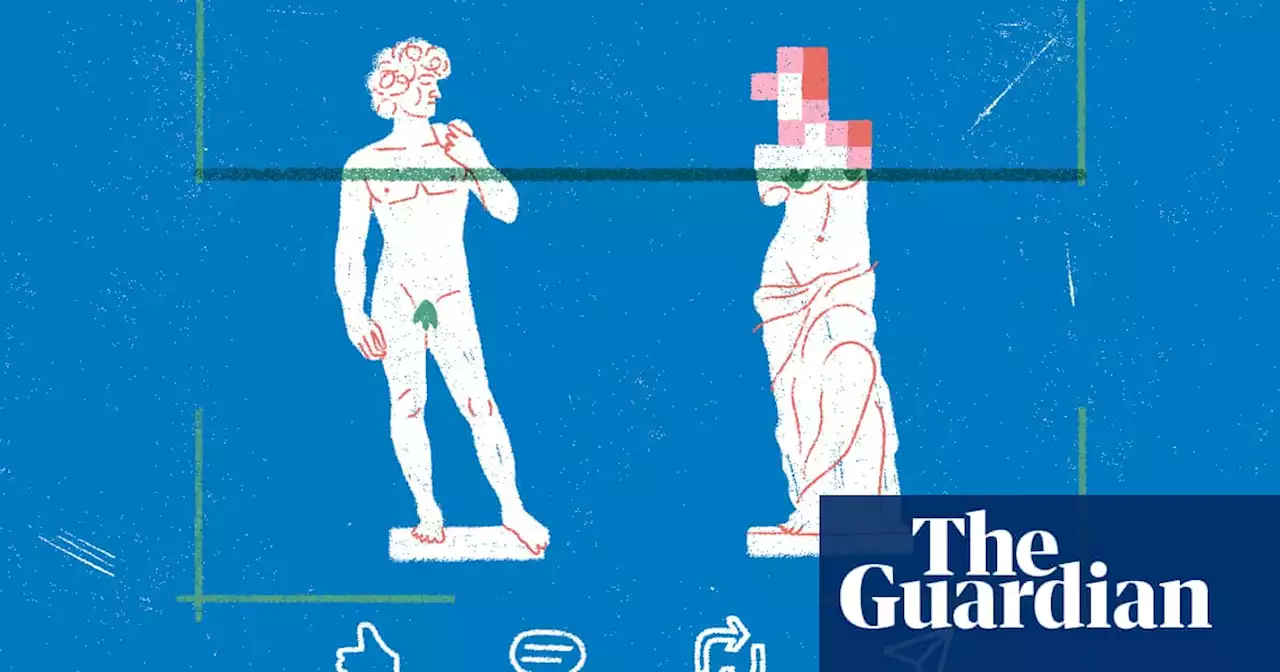

Guardian exclusive: AI tools rate photos of women as more sexually suggestive than those of men, especially if nipples, pregnant bellies or exercise is involved

Images posted on social media are analyzed by artificial intelligence algorithms that decide what to amplify and what to suppress. Many of these algorithms, a Guardian investigation has found, have a gender bias and may have been censoring and suppressing the reach of countless photos featuring women’s bodies. These AI tools are meant to protect users by identifying violent or pornographic visuals so that social media companies can block them before anyone sees them.

One social media company said it does not design its systems to create or reinforce biases, and that classifiers are not perfect. In one test, the photo of the women got eight views within one hour, while the picture with the two men received 655 views. This suggests the photo of the women in underwear was either suppressed or shadowbanned.

Shadowbanning has been documented for years, but the Guardian journalists may have found a missing link to understand the phenomenon: biased AI algorithms. Platforms seem to use these algorithms to rate images and limit the reach of content they consider too racy.

Screenshots of Microsoft’s platform in June 2021 and in July 2021. In the first version, there is a button to upload any photo and test the technology; that button has disappeared in the later version.

But what are these AI classifiers actually analyzing in the photos? More experiments were needed, so Mauro agreed to be the test subject.

Ideally, tech companies should have conducted thorough analyses of who is labeling their data, to make sure that the final dataset embeds a diversity of views, she said. The companies should also check that their algorithms perform similarly on photos of men v women and other groups, but that is not always done.

The gender bias the Guardian uncovered is part of more than a decade of controversy around content moderation on social media.

Google and Microsoft rated Wood’s photos as likely to contain explicit sexual content. Amazon categorized the image of the pregnant belly on the right as ‘explicit nudity’.

When some of Wood’s photos, including those featuring a pregnant belly, were run through the AI algorithms of Microsoft, Google and Amazon, they were rated as racy, as containing nudity, or even as explicitly sexual.

