Find out how the Intel Xeon 6 with P-cores makes the case for the host CPU

Since OpenAI first released ChatGPT into the world two years ago, generative AI has been mostly a playground for GPUs, primarily those from Nvidia, even though graphics chips from other vendors and AI-focused silicon have tried to make their way in.

CPUs haven't been sidelined in the AI era; they've always played a role in AI inferencing and deliver greater flexibility than their more specialized GPU brethren. In addition, their cost and power efficiency suit small language models, which carry from hundreds of millions to fewer than 10 billion parameters, rather than the billions to trillions of parameters of power-hungry LLMs.

At the Intel Vision event in April, Intel introduced the next generation of its datacenter stalwart, the Xeon processor line. The Intel Xeon 6 was designed with a highly distributed and continuously evolving computing environment in mind, and it offers two core microarchitectures rather than a single core design. In June, Intel introduced the Intel Xeon 6 with single-threaded E-cores for high-density and scale-out environments such as the edge, IoT devices, cloud-native, and hyperscale workloads.

The high system memory capacity also ensures there is enough memory for large AI models that can't fit entirely in GPU memory, guaranteeing flexibility and high performance. Intel is first to market with MRDIMM support, which has strong ecosystem backing from the likes of Micron, SK hynix, and Samsung.

United Kingdom Latest News, United Kingdom Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

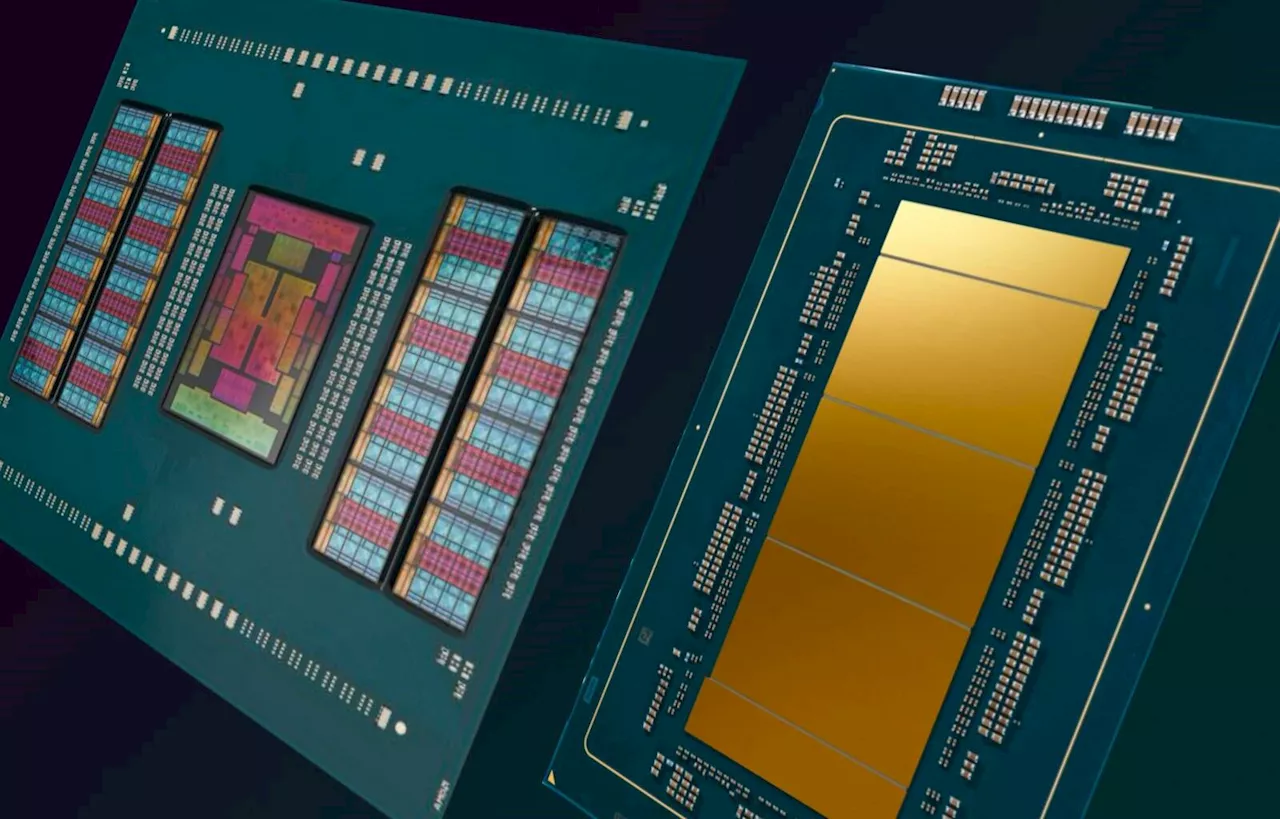

A closer look at Intel and AMD's different approaches to gluing together CPUsEpycs or Xeons, more cores=more silicon, and it only gets more complex from here

A closer look at Intel and AMD's different approaches to gluing together CPUsEpycs or Xeons, more cores=more silicon, and it only gets more complex from here

Read more »

Fujitsu, AMD lay groundwork to pair Monaka CPUs with Instinct GPUsBefore you get too excited, Fujitsu's next-gen chips won't ship till 2027

Fujitsu, AMD lay groundwork to pair Monaka CPUs with Instinct GPUsBefore you get too excited, Fujitsu's next-gen chips won't ship till 2027

Read more »

Nvidia's latest Blackwell boards pack 4 GPUs, 2 Grace CPUs, and suck down 5.4 kWYou can now glue four H200 PCIe cards together too

Nvidia's latest Blackwell boards pack 4 GPUs, 2 Grace CPUs, and suck down 5.4 kWYou can now glue four H200 PCIe cards together too

Read more »

Fujitsu delivers GPU optimization tech it touts as a server-saverMiddleware aimed at softening the shortage of AI accelerators

Fujitsu delivers GPU optimization tech it touts as a server-saverMiddleware aimed at softening the shortage of AI accelerators

Read more »

Nvidia continues its quest to shoehorn AI into everything, including HPCGPU giant contends that a little fuzzy math can speed up fluid dynamics, drug discovery

Nvidia continues its quest to shoehorn AI into everything, including HPCGPU giant contends that a little fuzzy math can speed up fluid dynamics, drug discovery

Read more »

No-Nvidias networking club convenes in search of open GPU interconnectUltra Accelerator Link consortium promises 200 gigabits per second per lane spec will debut in Q1 2025

No-Nvidias networking club convenes in search of open GPU interconnectUltra Accelerator Link consortium promises 200 gigabits per second per lane spec will debut in Q1 2025

Read more »